flowchart LR

A["Gaze Data<br/>(eye tracker)"] --> B["Cognitive Level Prediction Module"]

C["Face Images<br/>(webcam)"] --> D["Attentiveness Prediction Module<br/>(Convolutional Neural Network)"]

E["Code File"] --> F["Vulnerability Analysis<br/>(SonarQube)"]

B --> G["Vulnerability Selector Module"]

D --> G

F --> G

G --> H["Large Language Model<br/>(Custom Few-Shot prompt)"]

H --> I["Present Vulnerability Feedback to User"]

Cognitive-Aware Plugin for Vulnerability Feedback

TL;DR

We propose the Cognitive-Aware Plugin for Vulnerability Feedback (CAPV), a Visual Studio Code plugin that combines static analysis, real-time cognitive and attentiveness metrics from eye tracking and webcam images, and large language models to generate adaptive, personalized feedback for secure coding education.

Motivation

Prior Work: Large Language Models (LLMs)

- Can LLMs be used to help teach students secure coding?

- Issues?

Prior Work: Other Approaches?

- Prior studies have inferred students' attention and cognitive patterns in classrooms from multimodal data

- Adaptive micro-learning system for managing cognitive load [22]

- Opportunities?

- Incorporating cognitive/attention data for secure coding feedback

- Using cognitive/attention data with LLMs for generating tailored feedback

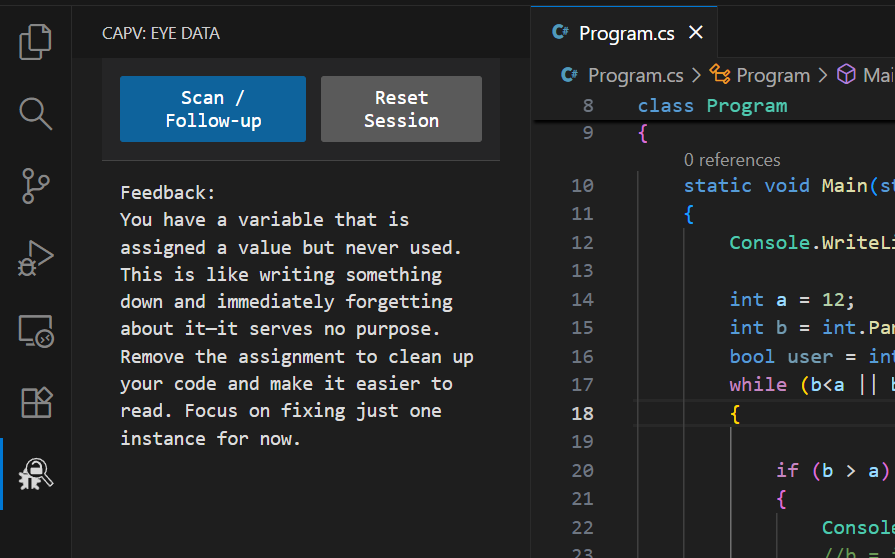

Proposed Approach: CAPV

- Cognitive-Aware Plugin for Vulnerability Feedback

- Effective software vulnerability feedback so programmers can learn to write more secure code

- Three components, inspired by existing research:

- Vulnerability Analysis using static analysis tools. Provides grounding to mitigate LLM hallucinations.

- Cognitive/Attention prediction using machine learning

- LLM to provide effective, tailored feedback
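The grounding idea in the first and third components can be illustrated with a minimal sketch of few-shot prompt assembly. It is not the CAPV implementation: the `Finding` shape, the example pairs, the rule ID, and the `build_prompt` helper are all hypothetical, and the learner-state labels are assumed to come from the prediction modules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One static-analysis finding (hypothetical shape, not SonarQube's schema)."""
    rule: str
    message: str
    line: int


# Hypothetical few-shot pairs: (finding + learner state, desired feedback style).
FEW_SHOT_EXAMPLES = [
    (
        "Finding: hard-coded password at line 8. "
        "Learner: high cognitive load, low attention.",
        "Line 8 stores a password in the source. Move it to an environment variable.",
    ),
    (
        "Finding: SQL built by string concatenation at line 21. "
        "Learner: low cognitive load, high attention.",
        "Line 21 concatenates user data into SQL, which allows injection. "
        "Use a parameterized query, and consider why escaping alone is fragile.",
    ),
]


def build_prompt(finding: Finding, cognitive_load: str, attention: str) -> str:
    """Assemble a few-shot prompt that restricts the LLM to the reported finding."""
    parts = [
        "You give secure-coding feedback to a student.",
        "Only discuss the finding reported by the static analyzer; do not invent others.",
        "",
    ]
    # Each example shows the model how feedback length should track learner state.
    for query, answer in FEW_SHOT_EXAMPLES:
        parts += [f"Input: {query}", f"Feedback: {answer}", ""]
    parts += [
        f"Input: Finding: {finding.message} at line {finding.line} "
        f"(rule {finding.rule}). "
        f"Learner: {cognitive_load} cognitive load, {attention} attention.",
        "Feedback:",
    ]
    return "\n".join(parts)


if __name__ == "__main__":
    demo = Finding(rule="EXAMPLE-001", message="SQL query built from user data", line=14)
    print(build_prompt(demo, cognitive_load="high", attention="low"))
```

Because the prompt only ever contains findings reported by the analyzer, the model is asked to explain a known issue rather than to detect issues itself, which is the sense in which static analysis provides grounding here.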

Theoretical Grounding

- Cognitive Load Theory [23]

- Reduce extraneous load by filtering raw outputs from static analysis

- Use cognitive/attentiveness prediction to match vulnerability difficulty to current working memory capacity

- Zone of Proximal Development [24]

- Use LLM to provide tailored scaffolding based on student expertise and engagement with the tool
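One way to read the Cognitive Load Theory bullets is as a selection rule over static-analysis findings. The sketch below is an assumption-laden illustration, not the CAPV selector: the 1 to 5 severity and difficulty scales, the `[0, 1]` scores, the capacity formula, and the `select_findings` name are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """A static-analysis finding with hypothetical 1..5 scales."""
    rule: str
    severity: int    # 1 = minor, 5 = critical
    difficulty: int  # 1 = easy to explain, 5 = hard


def select_findings(findings, cognitive_load, attentiveness, max_items=3):
    """Pick the findings to show now, given predicted learner state.

    cognitive_load and attentiveness are assumed to be scores in [0, 1]
    produced by the prediction modules.
    """
    for name, value in (("cognitive_load", cognitive_load),
                        ("attentiveness", attentiveness)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")

    # Assumed proxy for spare working-memory capacity:
    # high only when load is low and the learner is attentive.
    capacity = (1.0 - cognitive_load) * attentiveness

    # Capacity sets both a difficulty ceiling (1..5) and an item budget (>= 1).
    max_difficulty = 1 + round(capacity * 4)
    budget = max(1, round(capacity * max_items))

    # Filtering reduces extraneous load; sorting surfaces the most severe first.
    eligible = [f for f in findings if f.difficulty <= max_difficulty]
    eligible.sort(key=lambda f: (-f.severity, f.difficulty))
    return eligible[:budget]


if __name__ == "__main__":
    report = [
        Finding("A", severity=5, difficulty=5),
        Finding("B", severity=4, difficulty=2),
        Finding("C", severity=3, difficulty=1),
        Finding("D", severity=2, difficulty=4),
    ]
    print([f.rule for f in select_findings(report, 0.2, 1.0)])
    print([f.rule for f in select_findings(report, 0.9, 0.5)])
```

A multiplicative capacity score is only one possible choice; it makes either high load or low attention sufficient to shrink the feedback, which matches the intent of showing less when the learner has less capacity to absorb it.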

CAPV System Architecture

Figure: CAPV System Architecture Flowchart

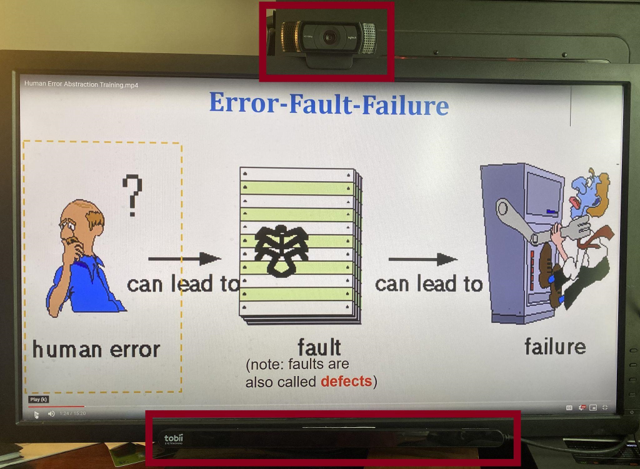

Example

Anticipated Outcomes

- Reductions in vulnerability introduction rates

- Improved efficiency in vulnerability detection and remediation

- Heightened user awareness and adoption of secure coding practices

Thank You!

Contact Information

👨💻 Andrew Sanders

References

[1]

A. Sanders, G. S. Walia, and A. Allen, “Analysis of Software Vulnerabilities Introduced in Programming Submissions Across Curriculum at Two Higher Education Institutions,” in 2024 IEEE Frontiers in Education Conference (FIE), Oct. 2024.

[2]

T. Yilmaz and Ö. Ulusoy, “Understanding security vulnerabilities in student code: A case study in a non-security course,” Journal of Systems and Software, vol. 185, p. 111150, Mar. 2022, doi: 10.1016/j.jss.2021.111150.

[3]

Joint Task Force on Computing Curricula, Association for Computing Machinery (ACM) and IEEE Computer Society, Computer Science Curricula 2013: Curriculum Guidelines for Undergraduate Degree Programs in Computer Science. New York, NY, USA: Association for Computing Machinery, 2013.

[4]

Department of Homeland Security, US-CERT, “Software Assurance.” Accessed: Jul. 11, 2023. [Online]. Available: https://www.cisa.gov/sites/default/files/publications/infosheet_SoftwareAssurance.pdf

[5]

Verizon, “Verizon 2023 Data Breach Investigations Report,” Verizon Business. Accessed: Oct. 30, 2024. [Online]. Available: https://www.verizon.com/business/resources/reports/dbir/

[6]

J. Zorabedian, “Veracode Survey Research Identifies Cybersecurity Skills Gap Causes and Cures,” Veracode. Accessed: Jul. 12, 2023. [Online]. Available: https://www.veracode.com/blog/security-news/veracode-survey-research-identifies-cybersecurity-skills-gap-causes-and-cures

[7]

J. Lam, E. Fang, M. Almansoori, R. Chatterjee, and A. G. Soosai Raj, “Identifying Gaps in the Secure Programming Knowledge and Skills of Students,” in Proceedings of the 53rd ACM Technical Symposium on Computer Science Education - Volume 1, in SIGCSE 2022, vol. 1. New York, NY, USA: Association for Computing Machinery, Feb. 2022, pp. 703–709. doi: 10.1145/3478431.3499391.

[8]

T. Gasiba, U. Lechner, J. Cuéllar, and A. Zouitni, “Ranking Secure Coding Guidelines for Software Developer Awareness Training in the Industry.” 2020. doi: 10.4230/OASIcs.ICPEC.2020.11.

[9]

K. A. Williams, X. Yuan, H. Yu, and K. Bryant, “Teaching secure coding for beginning programmers,” Journal of Computing Sciences in Colleges, vol. 29, no. 5, pp. 91–99, May 2014.

[10]

D. Pawelczak, “Teaching Security in Introductory C-Programming Courses,” 6th International Conference on Higher Education Advances (HEAd’20), Jun. 2020, doi: 10.4995/HEAd20.2020.11114.

[11]

J. Zhu, H. R. Lipford, and B. Chu, “Interactive support for secure programming education,” in Proceeding of the 44th ACM technical symposium on Computer science education, in SIGCSE ’13. New York, NY, USA: Association for Computing Machinery, Mar. 2013, pp. 687–692. doi: 10.1145/2445196.2445396.

[12]

H. Chi, E. L. Jones, and J. Brown, “Teaching Secure Coding Practices to STEM Students,” in Proceedings of the 2013 on InfoSecCD ’13: Information Security Curriculum Development Conference, in InfoSecCD ’13. New York, NY, USA: Association for Computing Machinery, Oct. 2013, pp. 42–48. doi: 10.1145/2528908.2528911.

[13]

M. Liu and F. M’Hiri, “Beyond Traditional Teaching: Large Language Models as Simulated Teaching Assistants in Computer Science,” in Proceedings of the 55th ACM Technical Symposium on Computer Science Education V. 1, in SIGCSE 2024. New York, NY, USA: Association for Computing Machinery, Mar. 2024, pp. 743–749. doi: 10.1145/3626252.3630789.

[14]

K. Tamberg and H. Bahsi, “Harnessing Large Language Models for Software Vulnerability Detection: A Comprehensive Benchmarking Study,” ArXiv, 2024, doi: 10.48550/ARXIV.2405.15614.

[15]

A. Sanders, G. S. Walia, L. Cordova, and T. Mendoza, “Pedalogical: Feedback tool to reduce software vulnerabilities in non-security computer science courses,” in 2025 IEEE Frontiers in Education Conference (FIE), 2025.

[16]

J. Prather et al., “The robots are coming: Exploring the implications of OpenAI Codex on introductory programming,” in Proceedings of the 2023 ACM Conference on Innovation and Technology in Computer Science Education, ACM, 2023, pp. 10–16.

[17]

S. MacNeil et al., “Experiences from using code explanations generated by large language models in a web software development e-book,” in Proceedings of the 54th ACM Technical Symposium on Computer Science Education, ACM, 2023, pp. 931–937.

[18]

P. Denny, V. Kumar, and N. Giacaman, “Conversing with Copilot: Exploring prompt engineering for solving CS1 problems using natural language,” in Proceedings of the 54th ACM Technical Symposium on Computer Science Education, ACM, 2023, pp. 1136–1142.

[19]

A. Sanders, B. Boswell, G. S. Walia, and A. Allen, “Non-intrusive classroom attention tracking system (NiCATS),” in 2021 IEEE Frontiers in Education Conference (FIE), 2021, pp. 1–9. doi: 10.1109/FIE49875.2021.9637411.

[20]

M. Elbawab and R. Henriques, “Machine learning applied to student attentiveness detection: Using emotional and non-emotional measures,” Education and Information Technologies, pp. 1–21, 2023, doi: 10.1007/s10639-023-11814-5.

[21]

N. Xie, Z. Liu, Z. Li, W. Pang, and B. Lu, “Student engagement detection in online environment using computer vision and multi-dimensional feature fusion,” Multimedia Systems, vol. 29, pp. 3559–3577, 2023, doi: 10.1007/s00530-023-01153-3.

[22]

B. Zhu, K. T. Chau, and N. A. M. Mokmin, “Optimizing cognitive load and learning adaptability with adaptive microlearning for in-service personnel,” Scientific Reports, vol. 14, 2024, doi: 10.1038/s41598-024-77122-1.

[23]

J. Sweller, J. J. van Merriënboer, and F. Paas, “Cognitive architecture and instructional design: 20 years later,” Educational Psychology Review, vol. 31, no. 2, pp. 261–292, 2019.

[24]

L. Vygotsky, Mind in society: Development of higher psychological processes. Cambridge: Harvard University Press, 1978.